Building Ubuntu Server 22.04 on vSphere with Packer

In my home lab, I regularly need a fresh Ubuntu Server to install test software on. Rather than use the built-in templating in vSphere, I wanted to use Packer to build an image, so that it includes the software I regularly use and is already up to date when I start using the server.

I initially built the template using JSON, but since HCL is now the preferred language, I converted the template to HCL and used some newer features like dynamic blocks. I’ve parameterised all of the vSphere values and included some values to be injected into the VM, such as the username, the initial user password and some SSH keys for the user’s authorized_keys file.

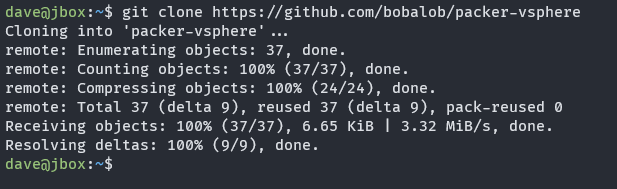

If you want to follow along, clone the git repo…

git clone https://github.com/bobalob/packer-vsphere

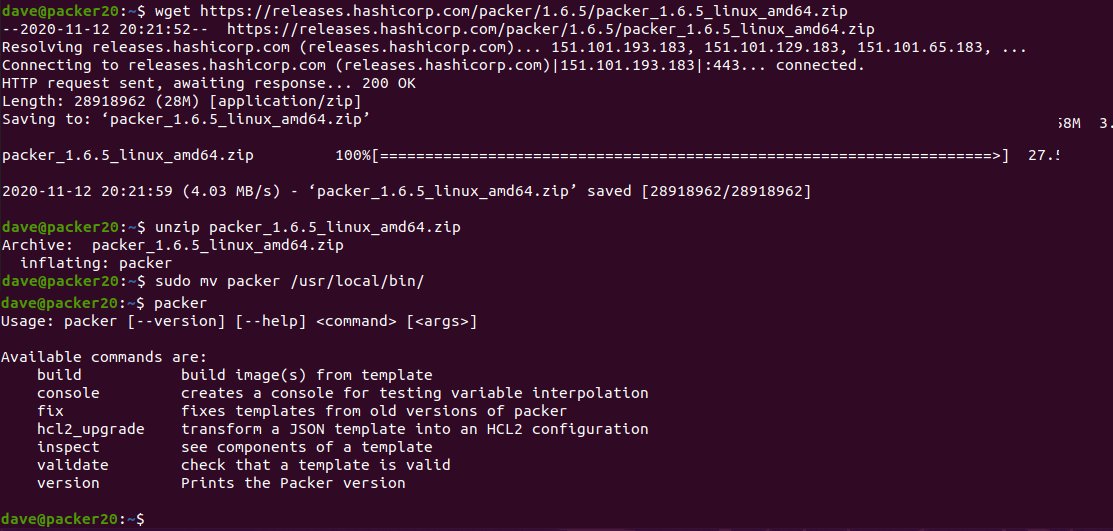

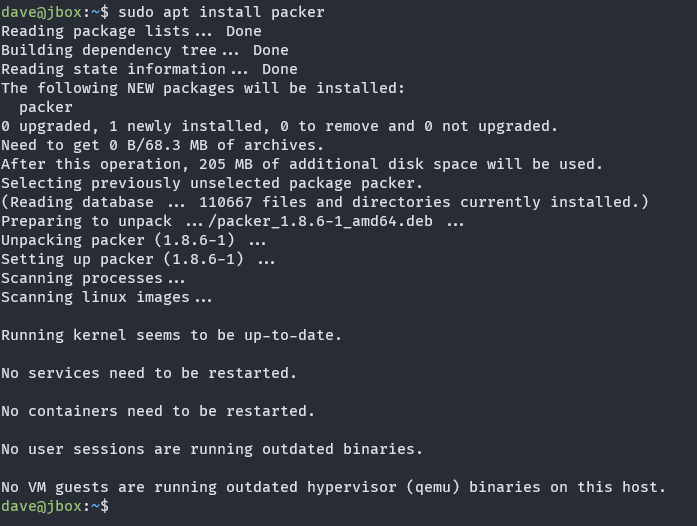

…and install Packer. If it isn’t available from your distribution’s repositories, download it from HashiCorp.

sudo apt install packer

Here’s the main template. The source section defines the virtual machine ready to boot in vSphere and the build section defines what will happen after the OS has been installed.

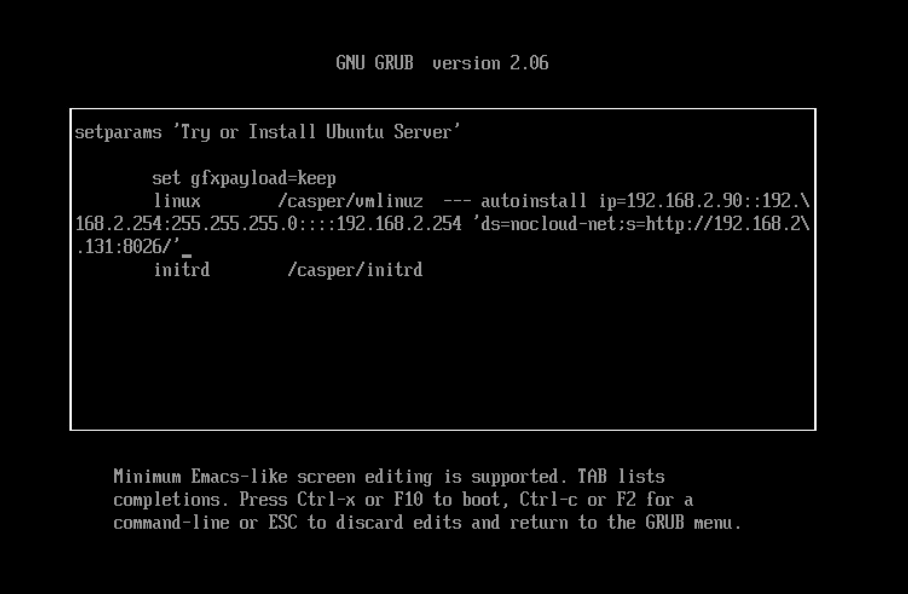

The boot_command sends keystrokes to the VM via the remote console, essentially typing a boot command into the GRUB boot menu. This instructs the machine to load the user-data file from Packer’s built-in HTTP server. The user-data file contains the basic information that subiquity (Ubuntu Server’s setup program) needs to install the OS.

The dynamic provisioner block is interesting because it iterates over the ssh_authorized_keys list variable and runs the echo | tee command inside the new VM once for each key.
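The net effect of that dynamic block inside the VM can be approximated in plain shell. This is only an illustration of what the expanded provisioners do, not the HCL from the template; the function name `append_authorized_keys` is hypothetical, and the step that restricts the file's permissions is my own addition.

```shell
#!/usr/bin/env bash
set -eu

# Append each public key to an authorized_keys file, one echo | tee per key.
# Usage: append_authorized_keys FILE KEY...
# (Hypothetical helper; the real template runs these commands via Packer.)
append_authorized_keys() {
  local file=$1 key
  shift
  mkdir -p "$(dirname "$file")"
  for key in "$@"; do
    # tee -a appends to the file and also prints the key to stdout,
    # which is why each key shows up in the build output.
    echo "$key" | tee -a "$file"
  done
  # Assumption: restrict permissions so sshd accepts the file.
  chmod 600 "$file"
}
```

Called as `append_authorized_keys ~/.ssh/authorized_keys "ssh-rsa AAAAB3NzaC1... user@box1" "ssh-rsa AAAAB3NzaC1... user@box2"`, it prints and appends one line per key, which is roughly what the dynamic block arranges for Packer to do.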

Some of the variables in the user-data file are modified by build.sh before Packer is executed, which I’ll show a bit later.

The template uses variables for most user input so it can be used in other environments.

To provide user variables, a file named variables.auto.pkrvars.hcl can be placed in the files/ directory. SSH public keys can be placed in the ssh_authorized_keys variable which will be injected into the VM in the build step. An example variables.auto.pkrvars.hcl file:

    temp_dns = "192.168.2.254"
    temp_gw = "192.168.2.254"
    temp_ip = "192.168.2.90"
    temp_mask = "255.255.255.0"
    vcenter_cluster = "mycluster.domain.local"
    vcenter_datacenter = "Home"
    vcenter_datastore = "Datastore1"
    vcenter_iso_path = "[Datastore1] ISO/ubuntu-22.04.1-live-server-amd64.iso"
    vcenter_network = "VM network"
    vcenter_server = "vcenter.domain.local"
    vcenter_user = "administrator@vsphere.local"
    ssh_authorized_keys = [
      "ssh-rsa AAAAB3NzaC1... user@box1",
      "ssh-rsa AAAAB3NzaC1... user@box2"
    ]

The temporary static IP is there because, in my environment, the machine would get a different DHCP-assigned address after the first reboot and Packer would not reconnect to the new IP.

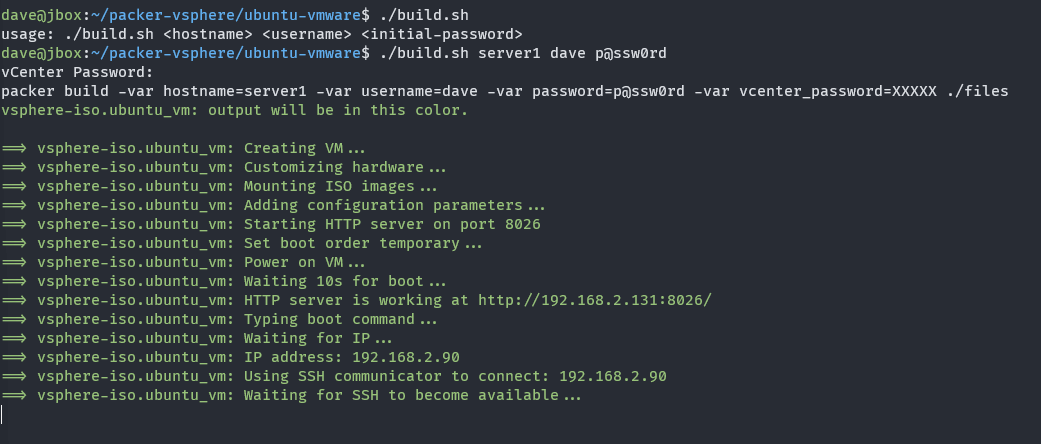

Running build.sh modifies some values in the user-data file that Ubuntu reads during setup. It uses openssl to generate a password hash from the user-supplied password, and a read command to prompt for the vCenter password so that it’s neither entered on the command line nor echoed to the screen.
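For readers who can’t see the screenshot, here is a minimal sketch of how a wrapper like build.sh could do this. It is not the script from the repo: the placeholder tokens `__USERNAME__` and `__PASSWORD_HASH__`, the `files/user-data.template` path, the `ubuntu` username and the `vcenter_password` variable name are all assumptions made for illustration.

```shell
#!/usr/bin/env bash
set -eu

# Produce a SHA-512 crypt hash for the autoinstall password field.
# The password is piped in via stdin so it never appears in the process list.
hash_password() {
  printf '%s\n' "$1" | openssl passwd -6 -stdin
}

# Replace placeholder tokens in a user-data template and print the result.
# '|' is the sed delimiter because crypt hashes can contain '/'.
# (Token names are hypothetical.)
render_user_data() {
  local template=$1 username=$2 hash=$3
  sed -e "s|__USERNAME__|${username}|g" \
      -e "s|__PASSWORD_HASH__|${hash}|g" "$template"
}

main() {
  local vcenter_password user_password
  # -s suppresses echo (a bash extension), so passwords are not shown.
  read -r -s -p "vCenter password: " vcenter_password; echo
  read -r -s -p "Initial user password: " user_password; echo

  # Assumed layout: a template next to the generated user-data file.
  render_user_data files/user-data.template "ubuntu" \
    "$(hash_password "$user_password")" > files/user-data

  # Packer reads HCL2 variables from PKR_VAR_<name> environment variables,
  # which keeps the password out of the command line.
  PKR_VAR_vcenter_password="$vcenter_password" packer build files/
}
```

The sketch only defines functions; a real script would end with `main "$@"`. Passing the secret through the environment rather than a `-var` flag is a design choice on my part, made to match the goal of keeping it off the command line.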

And finally, here’s the provision.sh script that runs inside the VM once it’s built. The provision script just does an apt update/upgrade, cleans up the cloud-init configuration left by the installer, expires the user’s password and resets its sudo configuration.
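As a rough sketch of those four steps (not the actual provision.sh from the repo), something like the following would do the job. The installer config file name and the sudoers path are assumptions based on a typical subiquity install, and the `run` wrapper with its `DRY_RUN` switch is a hypothetical addition so the steps can be reviewed without root.

```shell
#!/usr/bin/env bash
set -eu

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: provision USERNAME  (must run as root unless DRY_RUN=1)
provision() {
  local user=$1
  export DEBIAN_FRONTEND=noninteractive

  # Bring the image up to date.
  run apt-get update
  run apt-get -y upgrade

  # Remove installer-written cloud-init config and reset cloud-init state.
  # (File name is an assumption, not taken from the original script.)
  run rm -f /etc/cloud/cloud.cfg.d/99-installer.cfg
  run cloud-init clean --logs

  # Expire the password so it must be changed at first login.
  run chage -d 0 "$user"

  # Drop a build-time passwordless sudo rule (hypothetical path).
  run rm -f "/etc/sudoers.d/${user}"
}
```

Running `DRY_RUN=1 provision ubuntu` lists the commands without touching the system, which is a convenient way to check the order before baking it into an image.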

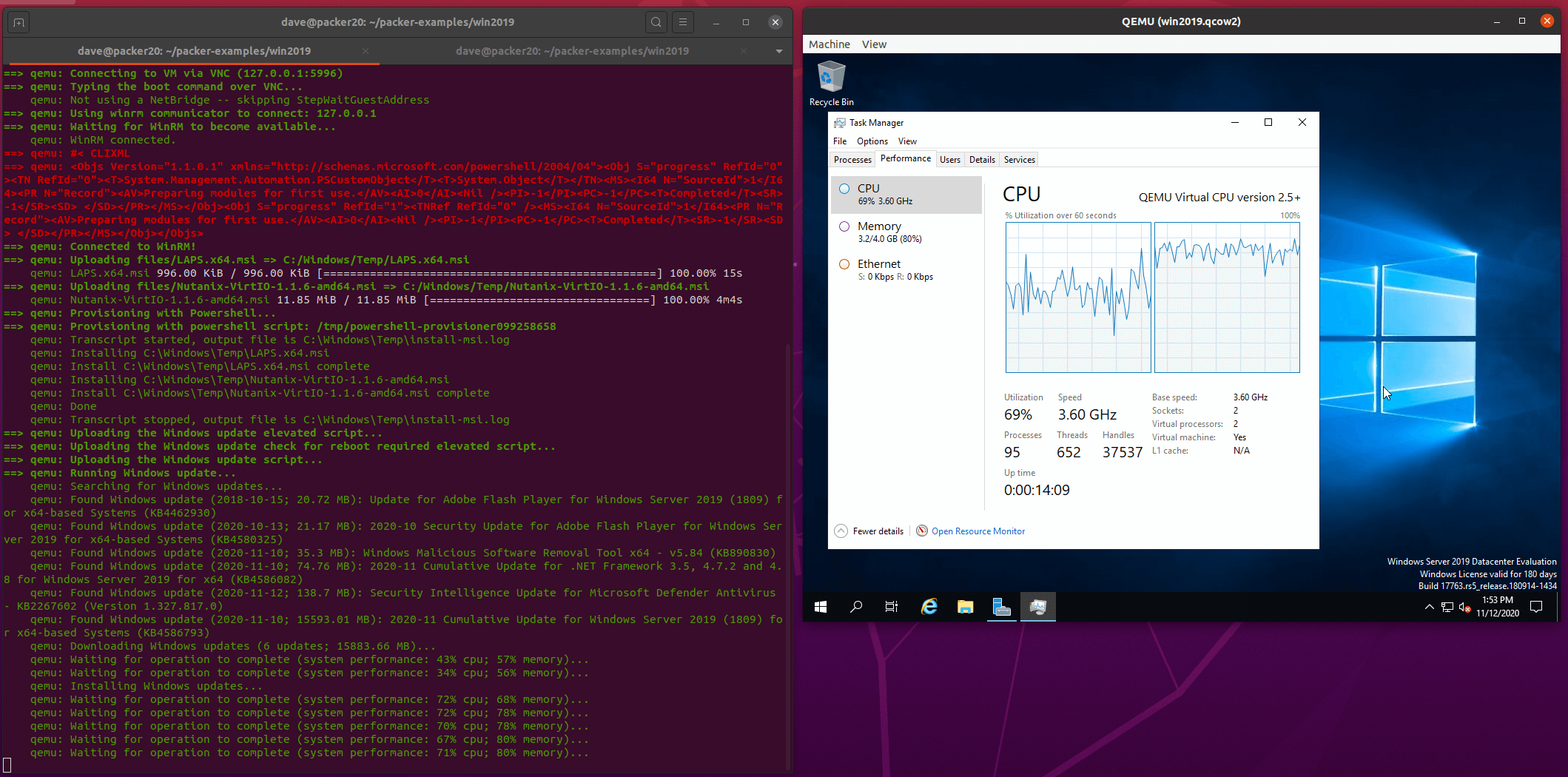

Building the machine

Running build.sh

Packer typing the boot command

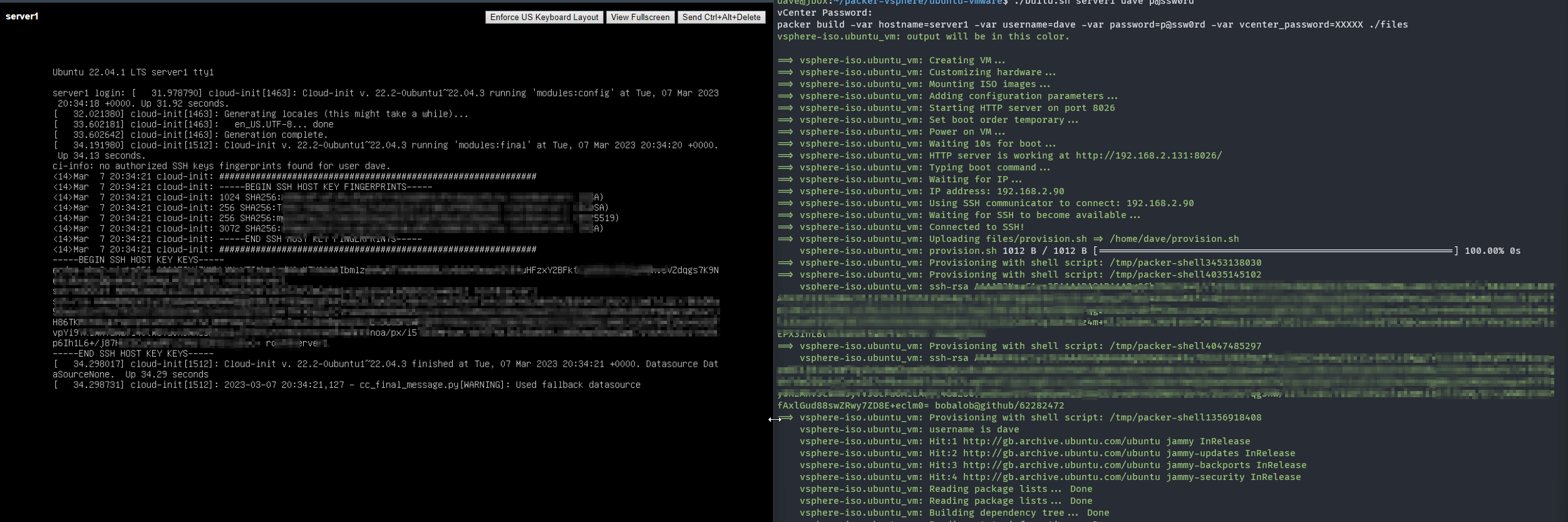

The build section of the template running. Notice that an ssh-rsa public key is echoed twice, once for each key: the dynamic block in the build section of the template iterates over the list of keys provided in the variable.

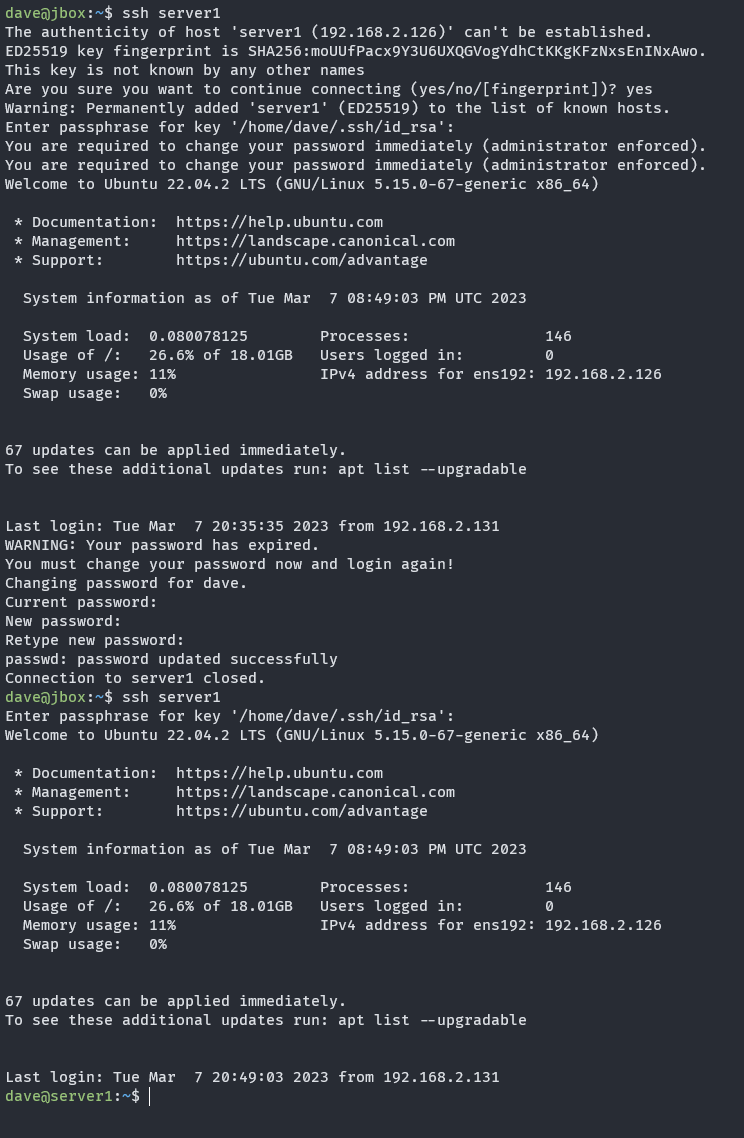

Once the machine is built, I can log in with my SSH key. Since provision.sh expired the user password, we’re prompted to change the initial password at first login.

I already have Ansible set up in my home lab, so I’ll try to integrate that into this build in the future.
