AHV Running as a Hyper-V Guest VM

Want to test out Nutanix Community Edition but don’t have the hardware handy? If you have a decent Hyper-V host then it’s possible to install CE inside a guest VM in Hyper-V.

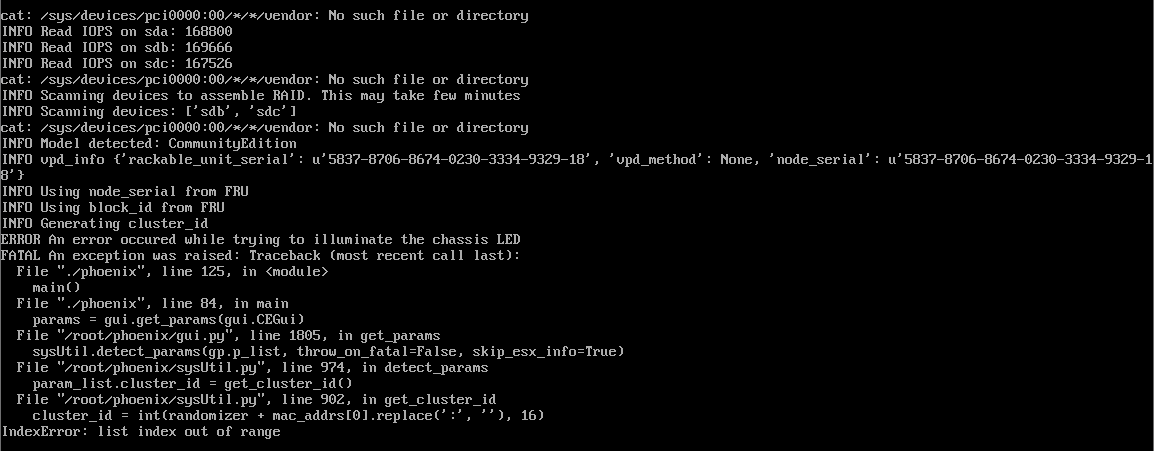

Unfortunately the ISO you can download direct from Nutanix fails when checking for network interfaces during the install. Attempting to install straight from the ISO with regular or legacy NICs results in the following error:

    FATAL An exception was raised: Traceback (most recent call last):
      File "./phoenix", line 125, in <module>
        main()
      File "./phoenix", line 84, in main
        params = gui.get_params(gui.CEGui)
      File "/root/phoenix/gui.py", line 1805, in get_params
        sysUtil.detect_params(gp.p_list, throw_on_fatal=False, skip_esx_info=True)
      File "/root/phoenix/sysUtil.py", line 974, in detect_params
        param_list.cluster_id = get_cluster_id()
      File "/root/phoenix/sysUtil.py", line 974, in get_cluster_id
        cluster_id = int(randomizer + mac_addrs[0].replace(':',''), 16)
    IndexError: list index out of range

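The IndexError on mac_addrs[0] means the installer found no network interfaces at all, so its list of MAC addresses is empty. It is, however, possible to modify the installer so that it detects Hyper-V guest network interfaces and can successfully install and start a new single-node cluster.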

The requirements for the guest VM are not insignificant, so you’ll need the following.

VM Specification

- Generation 1 VM (BIOS Boot)

- 4+ vCPU Cores (I have tested with 8)

- 22 GB+ RAM, Statically Assigned

- 3 Dynamically Expanding VHDs attached to the IDE interface

  - 32 GB AHV Boot Disk

  - 256 GB CVM & Data (Must be SSD backed)

  - 512 GB Data Disk

- Nested Virtualisation enabled on the VM

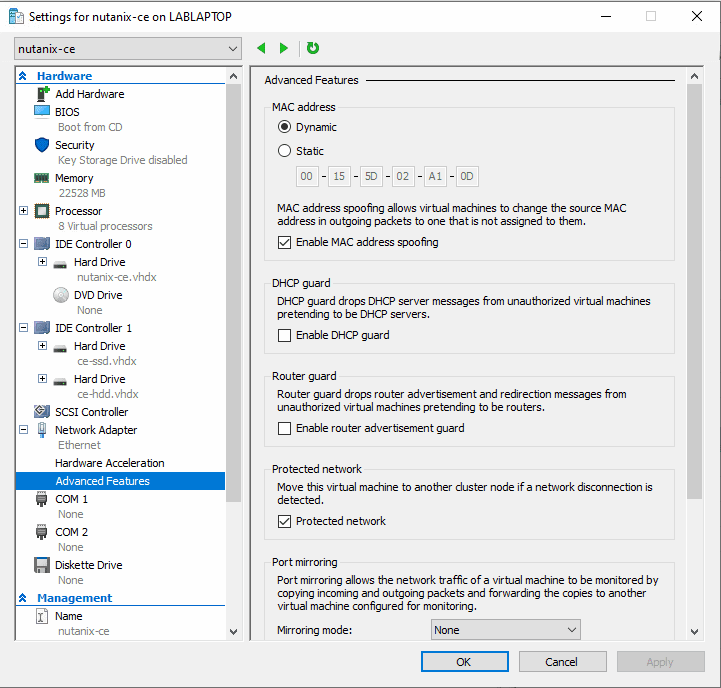

- At least one NIC, with MAC address spoofing enabled so that the CVM and guest VMs can reach the network.

Start by downloading ce-2020.09.16.iso from the Nutanix Community Edition forum (requires registration).

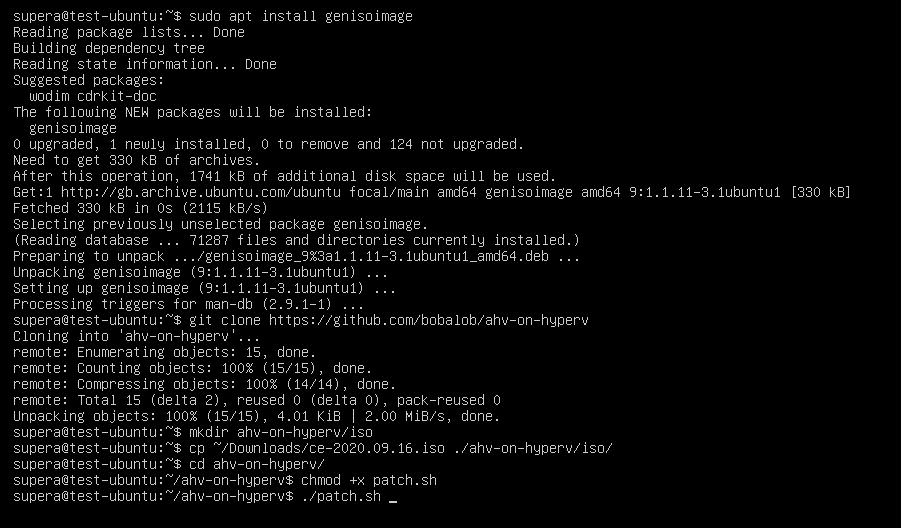

Patch the ISO using the patch.sh script from the ahv-on-hyperv repository (https://github.com/bobalob/ahv-on-hyperv). I used a fresh, temporary Ubuntu 20.04 Server VM for this and copied the ISO into it. The script might also work in WSL, but I haven’t tested that. It only modifies a few lines in some of the installer’s Python setup scripts. You may be able to do this manually, but it requires unpacking and repacking the initrd file on the ISO in a very specific way.

This is an alpha-grade script, so use it at your own risk.
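For anyone curious about the manual route, the unpack and repack cycle for an initrd usually looks something like the sketch below. This is not taken from patch.sh. The helper names are hypothetical, and it assumes the initrd is a gzip-compressed cpio archive, which you should confirm with `file` before relying on it. Run it as root (or under fakeroot) if the archive contains device nodes or root-owned files that need to be preserved.

```shell
#!/usr/bin/env bash
# Sketch only: generic unpack/repack of a gzip-compressed cpio initrd.
# ASSUMPTION: the initrd is gzip + cpio (newc). Verify with `file <initrd>`.
# unpack_initrd and repack_initrd are hypothetical helpers, not part of patch.sh.
set -euo pipefail

# unpack_initrd <initrd-file> <target-dir>
unpack_initrd() {
    local initrd target
    initrd=$(readlink -f "$1")
    target=$2
    mkdir -p "$target"
    # cpio extracts relative to the current directory, so work in a subshell.
    ( cd "$target" && gzip -dc "$initrd" | cpio -idm --quiet )
}

# repack_initrd <source-dir> <output-file>
repack_initrd() {
    local src out
    src=$1
    out=$(readlink -f "$2")
    # The kernel expects the "newc" cpio format for an initramfs.
    ( cd "$src" && find . -print0 | cpio --null -o -H newc --quiet | gzip -9 > "$out" )
}
```

Typical use would be `unpack_initrd initrd.img ./work`, edit the files under `./work`, then `repack_initrd ./work initrd-patched.img`. The ISO itself still has to be rebuilt afterwards with the correct boot settings, which is the part the script automates.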

The script has one prerequisite to install.

sudo apt install genisoimage

Then clone the repository, copy your downloaded ce-2020.09.16.iso file into its iso directory and run the script.

git clone https://github.com/bobalob/ahv-on-hyperv

mkdir ./ahv-on-hyperv/iso

cp ~/Downloads/ce-2020.09.16.iso ./ahv-on-hyperv/iso/

cd ahv-on-hyperv/

chmod +x patch.sh

./patch.sh
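If the script fails early, it is worth ruling out the two obvious causes first: genisoimage not being installed, or the ISO not sitting where the script looks for it. A minimal pre-flight sketch follows. The helper names are hypothetical and not part of patch.sh, and it assumes the ISO keeps its original file name inside the iso directory, as in the commands above.

```shell
#!/usr/bin/env bash
# Sketch only: pre-flight checks before running patch.sh.
# have_cmd, iso_in_place and preflight are hypothetical helper names.

# have_cmd <name>: succeeds if the command is on PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# iso_in_place <repo-dir> <iso-name>: succeeds if the ISO exists and is
# non-empty inside the repository's iso/ directory.
iso_in_place() {
    [ -s "$1/iso/$2" ]
}

# preflight <repo-dir> <iso-name>: reports anything missing on stderr and
# returns non-zero if at least one check failed.
preflight() {
    local status=0
    if ! have_cmd genisoimage; then
        echo "missing: genisoimage (sudo apt install genisoimage)" >&2
        status=1
    fi
    if ! iso_in_place "$1" "$2"; then
        echo "missing: $1/iso/$2" >&2
        status=1
    fi
    return "$status"
}
```

From the directory containing the clone, this could be used as `preflight ./ahv-on-hyperv ce-2020.09.16.iso && (cd ahv-on-hyperv && ./patch.sh)`.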

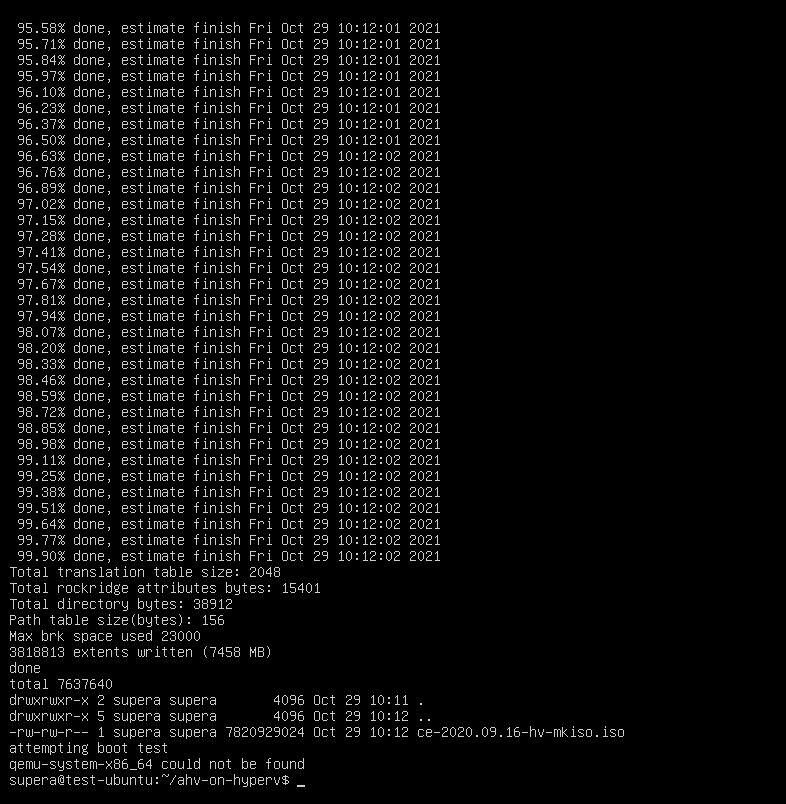

Once finished it should look a bit like this.

If your Ubuntu machine has KVM/QEMU installed, the script will boot the ISO. This is expected to fail because there are no disks attached, and you can safely stop the VM. Once patched, copy the new ce-2020.09.16-hv-mkiso.iso from your Ubuntu machine to your Hyper-V host.

Create a new virtual machine with the above specification, then enable nested virtualisation by running the following command on the Hyper-V host while the VM is powered off:

Set-VMProcessor -VMName <VMName> -ExposeVirtualizationExtensions $true
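Once the VM is running any Linux environment (a live ISO, or the AHV host shell after the install), one way to confirm the setting took effect is to look for the virtualisation flags in /proc/cpuinfo. This is a minimal sketch: `virt_flag` is a hypothetical helper name, and its optional file argument only exists so the check can be run against a saved copy of /proc/cpuinfo.

```shell
#!/usr/bin/env bash
# Sketch only: report whether a Linux guest can see hardware virtualisation.
# virt_flag is a hypothetical helper, not part of any Nutanix tooling.

# virt_flag [cpuinfo-file]: prints the first virtualisation flag found
# (vmx = Intel VT-x, svm = AMD-V) and returns non-zero if there is none.
virt_flag() {
    local cpuinfo=${1:-/proc/cpuinfo}
    # -w matches whole words only, so flags such as svm_lock do not count.
    grep -m1 -owE 'vmx|svm' "$cpuinfo"
}

if flag=$(virt_flag); then
    echo "virtualisation extensions visible to this guest: $flag"
else
    echo "no vmx/svm flag found; nested virtualisation is probably not enabled"
fi
```

If no flag shows up, shut the VM down and run the Set-VMProcessor command again before attempting the install.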

Attach the patched ISO ce-2020.09.16-hv-mkiso.iso and boot the VM.

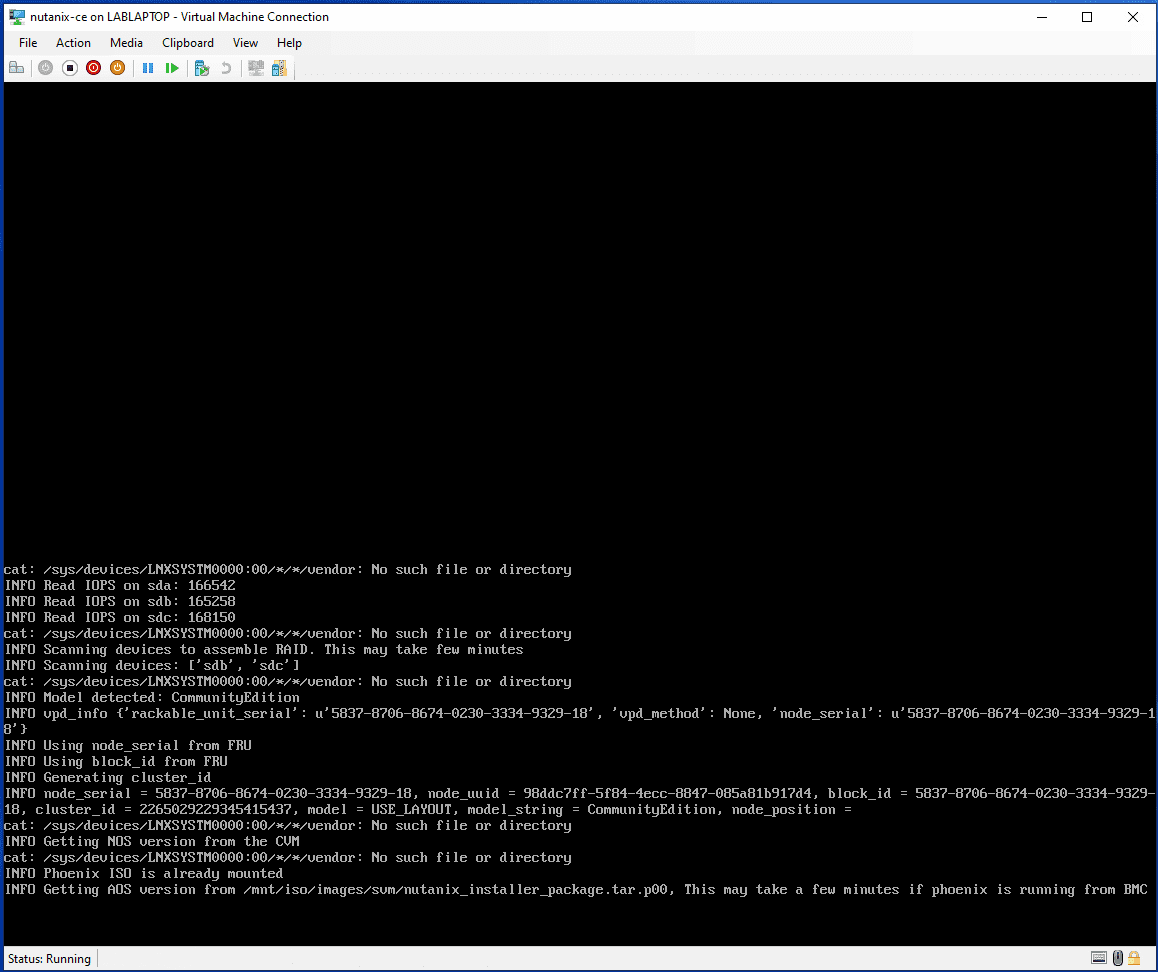

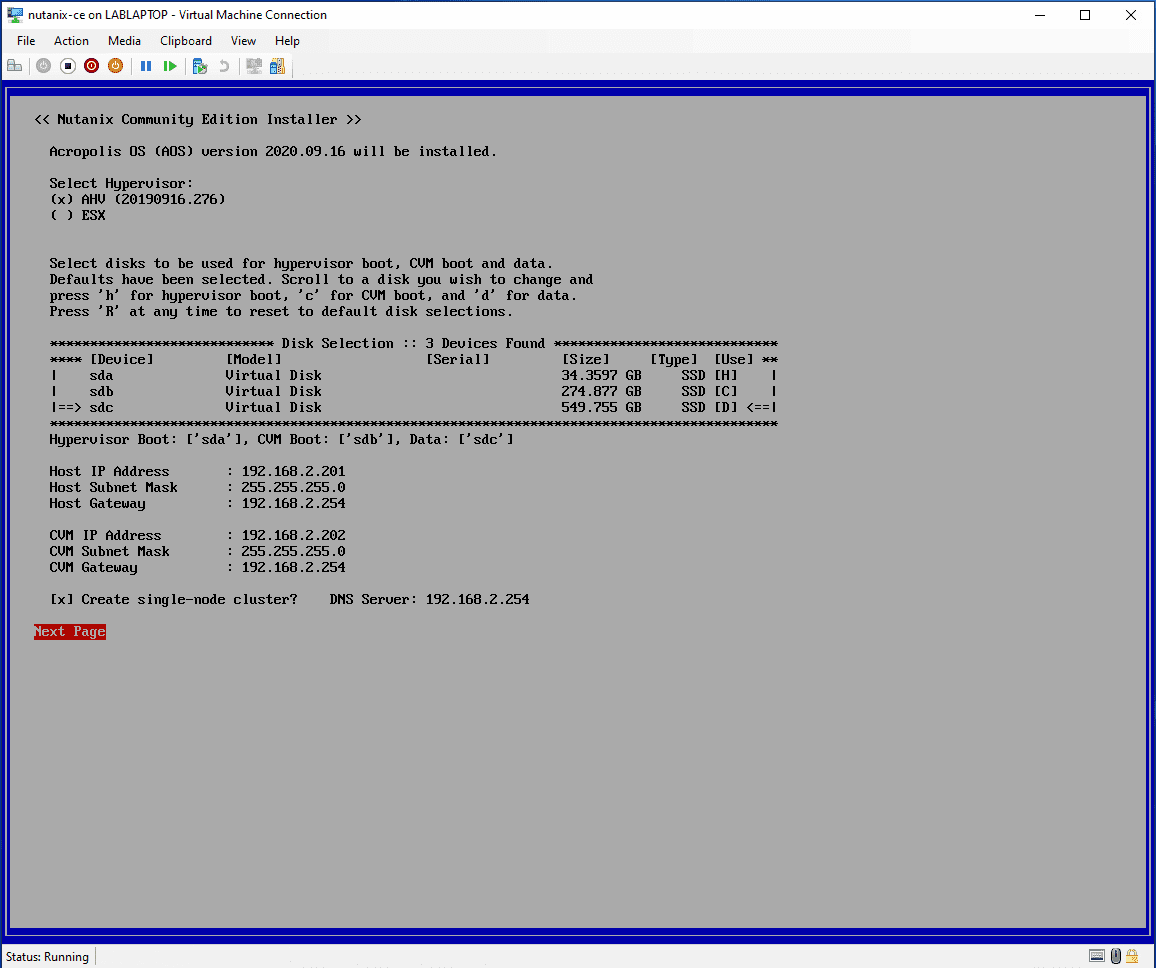

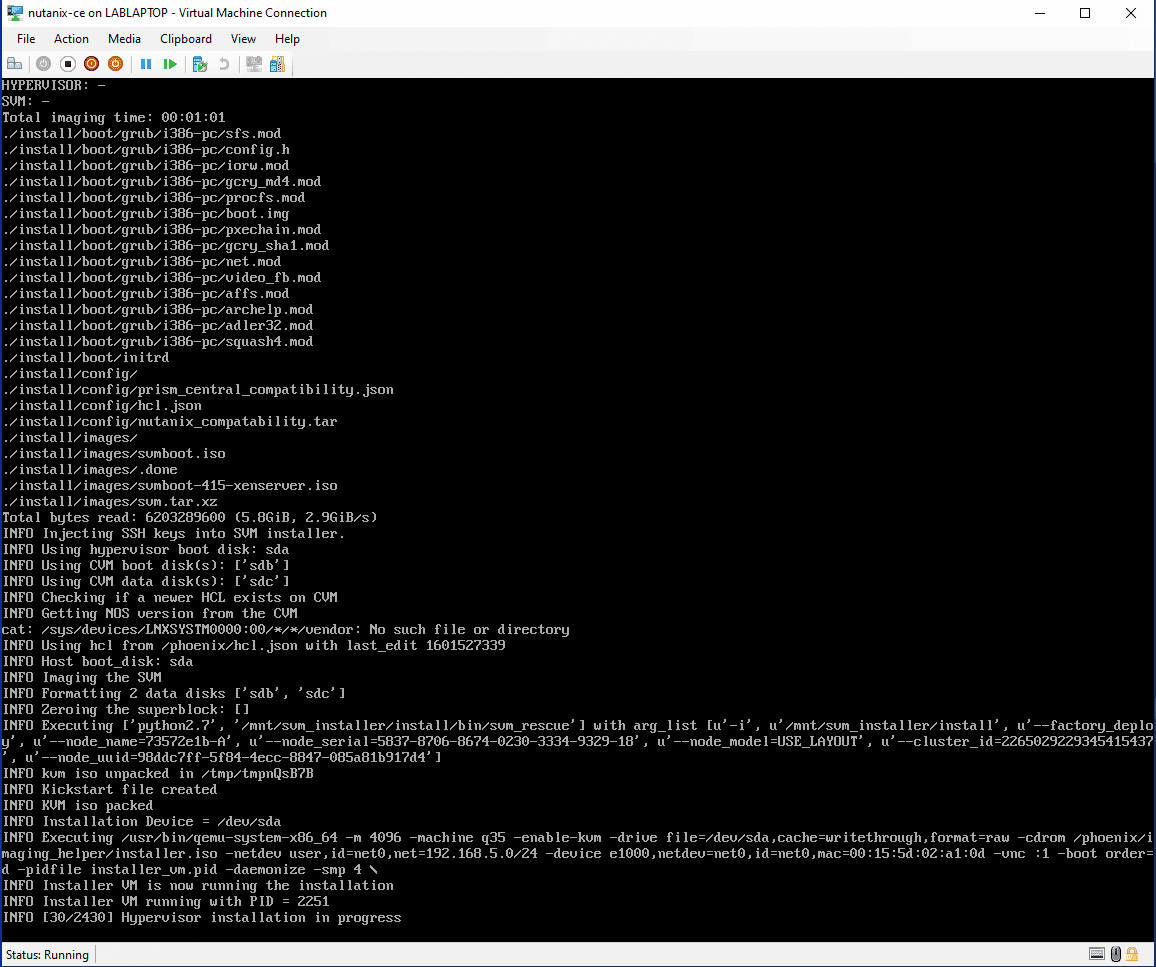

Installation

Follow the normal installation path.

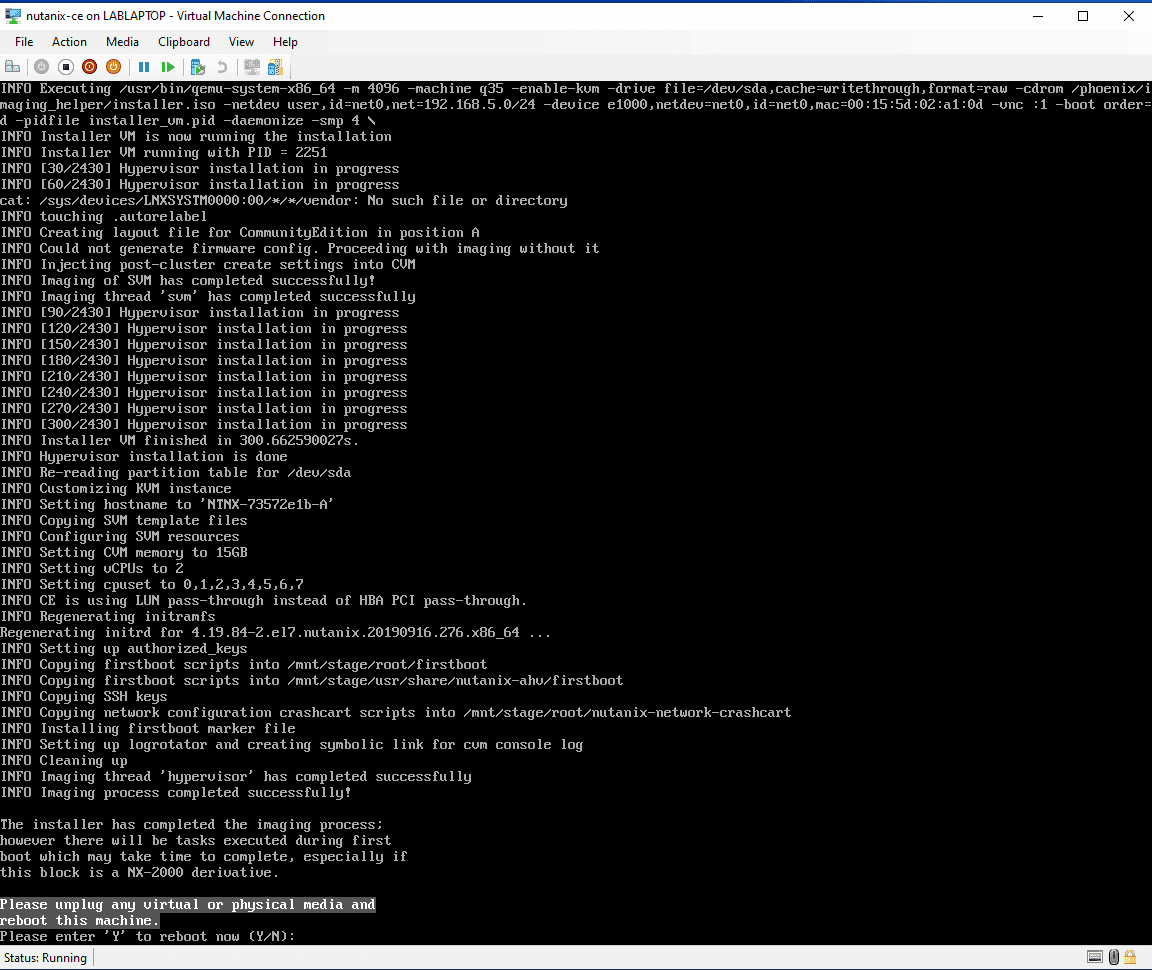

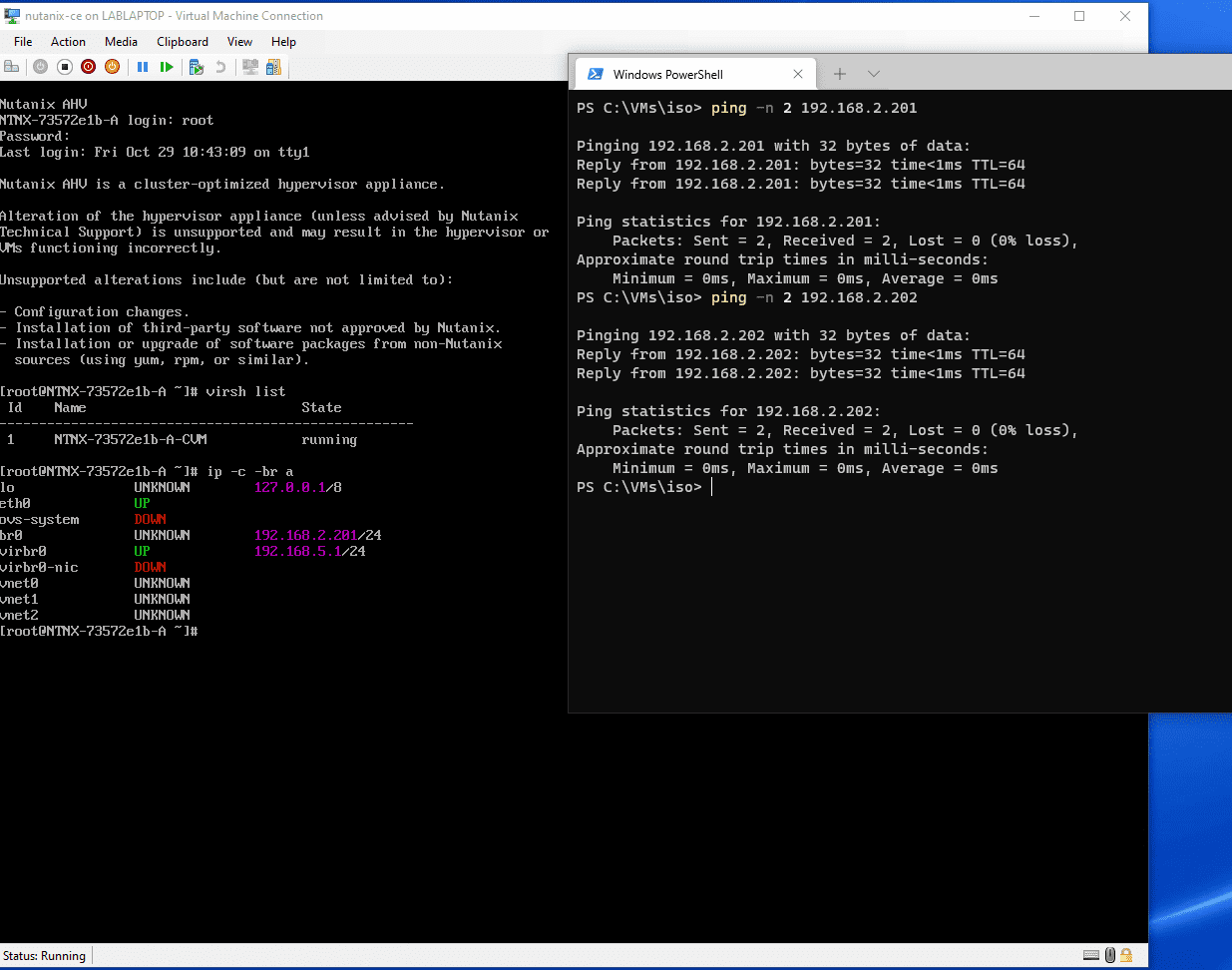

Install Complete

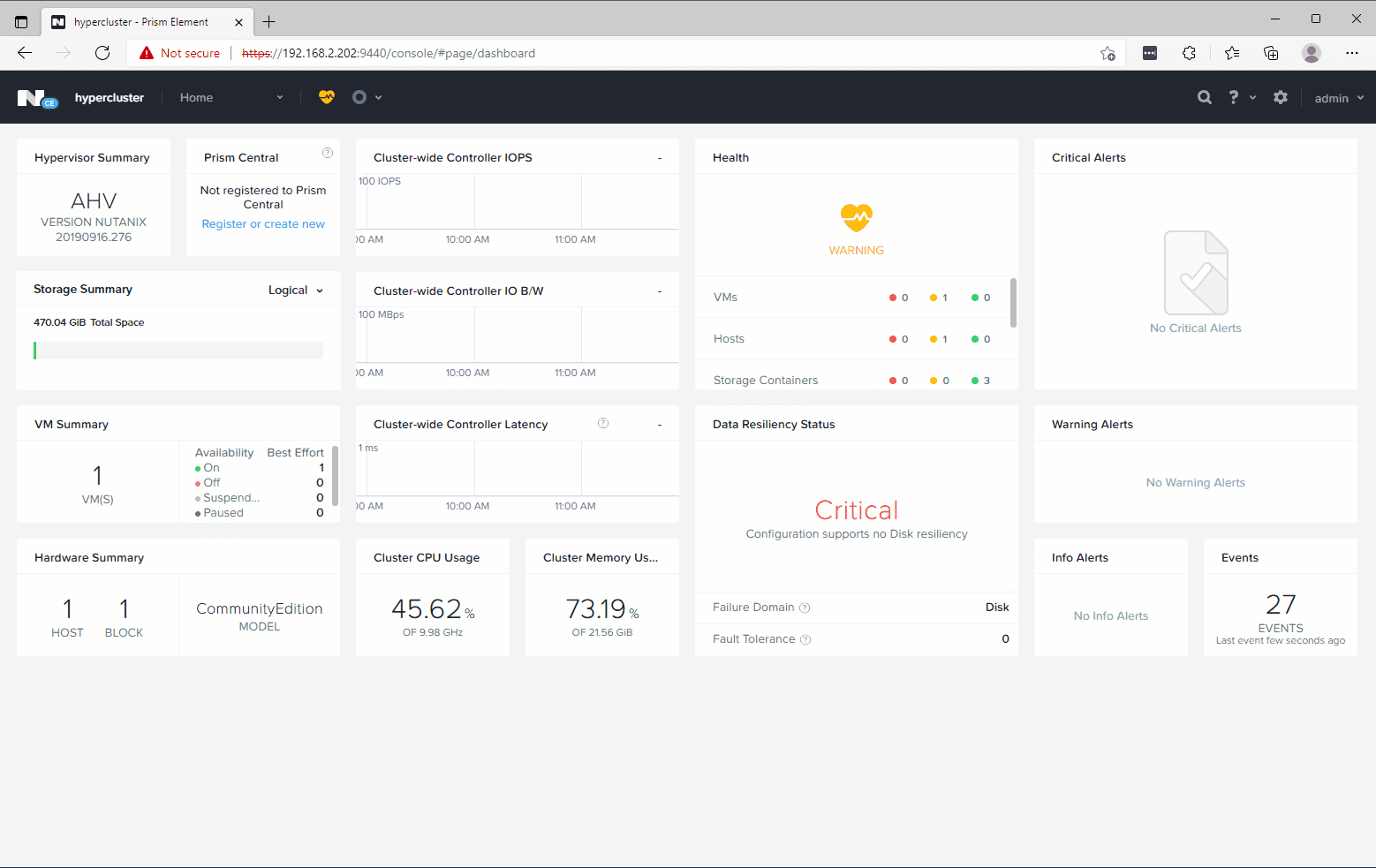

Prism Running!

Remove the ISO from the VM and reboot when told; give it 15-20 minutes to start up. Enjoy your new dev AHV/AOS installation.


This also works for 6.5 if you just rename the ISO file.

Although this works with 6.5.2 to patch the ISO and successfully run the install, upon completion no CVM is created and no network adapters are created. I cannot do anything other than log on.

It's because the kernel modules for the network adapter aren't there when you finally boot into AHV. Interestingly, the NIC is available in the phoenix installer, so ESXi might be possible.

Do you know of a resolution to this?

I did manage to get AHV to boot with a NIC active by replacing the kernel in a chroot with a standard CentOS one after installation. Once booted, though, AHV can't start any user VMs as it complains about the monitor CPU feature not being present. I think it's probably a lost cause (at least on AMD).

Hi, I managed to run the script in WSL ;) but line by line, manually. Same problem as above, though: no eth0.